Section: New Results

An Approach to Improve Multi-object Tracker Quality Using Discriminative Appearances and Motion Model Descriptor

Participants: Thi Lan Anh Nguyen, Duc Phu Chau, François Brémond.

Keywords: Tracklet fusion, Multi-object tracking

Many approaches have recently been proposed to track multiple objects in a video. However, tracker quality is strongly affected by video content. In the state of the art, several algorithms address this issue. The approaches in [39] and [64] compute or learn descriptor weights online during the tracking process. These algorithms adapt the tracking to scene variations but are less effective when mis-detection persists over a long period of time. Conversely, the algorithms in [59] and [58] can recover from long-term mis-detection by fusing tracklets. However, the descriptor weights in these tracklet fusion algorithms are fixed for the whole video. Furthermore, the above algorithms track objects based on object appearance, which is not reliable enough when objects look similar to each other.

To overcome these issues, the proposed approach makes three contributions: (1) a combination of appearance descriptors and a motion model, (2) online computation of discriminative descriptor weights and (3) tracklet fusion based on discriminative descriptors. In particular, the appearance of an object can be discriminative with respect to the other objects in one scene but similar to the other objects in another scene. Therefore, tracking objects based only on their appearance is less effective. To improve tracker quality, and assuming that objects move with constant velocity, this approach first combines a constant velocity model from [70] with the appearance descriptors. Next, discriminative descriptor weights are computed online to adapt the tracking to each video scene: the more a descriptor discriminates one tracklet from the other tracklets, the higher its weight. Then, based on these descriptor weights, the similarity score between the target tracklet and each of its candidates is computed. In the last step, tracklets are fused into long trajectories by the Hungarian algorithm, which optimizes the global similarity scores.
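
The weighting and fusion steps can be illustrated with a minimal Python sketch. It is not the authors' implementation: the weighting rule (one minus the mean similarity to coexisting tracklets, normalised over descriptors), the acceptance `threshold`, and all function names are assumptions made for illustration. To stay dependency-free, an exhaustive search over one-to-one assignments stands in for the Hungarian algorithm; it reaches the same optimum on small inputs but does not scale.

```python
from itertools import permutations


def descriptor_weights(neighbour_sims):
    """neighbour_sims[k] lists the similarities (in [0, 1]) between one tracklet
    and the tracklets coexisting with it, measured with descriptor k.
    Assumed rule: low similarity to the others means high discriminative power."""
    raw = [1.0 - sum(sims) / len(sims) for sims in neighbour_sims]
    total = sum(raw)
    if total == 0:  # no descriptor discriminates: fall back to uniform weights
        return [1.0 / len(raw)] * len(raw)
    return [r / total for r in raw]


def global_similarity(weights, candidate_sims):
    """Weighted sum of the per-descriptor similarities to one candidate."""
    return sum(w * s for w, s in zip(weights, candidate_sims))


def fuse(score, threshold=0.5):
    """score[i][j] is the global similarity between target tracklet i and
    candidate j. Returns the (i, j) pairs of the one-to-one assignment that
    maximises the total score, keeping only pairs at or above the threshold.
    Exhaustive search replaces the Hungarian algorithm here."""
    n_targets, n_cands = len(score), len(score[0])
    assert n_targets <= n_cands, "sketch assumes at least as many candidates as targets"
    best = max(
        permutations(range(n_cands), n_targets),
        key=lambda p: sum(score[i][j] for i, j in enumerate(p)),
    )
    return [(i, j) for i, j in enumerate(best) if score[i][j] >= threshold]


# Hypothetical values: descriptor 0 (appearance) barely separates the tracklet
# from its neighbours, descriptor 1 (motion) separates it well.
weights = descriptor_weights([[0.9, 0.9], [0.1, 0.3]])
score_to_candidate = global_similarity(weights, [0.8, 0.6])

# A greedy matcher would pair target 0 with candidate 0 (score 0.9);
# the global optimum pairs 0 with 1 and 1 with 0 (total 1.65 versus 1.1).
pairs = fuse([[0.9, 0.8], [0.85, 0.2]])
```

The example shows why a global assignment is preferred over picking the best candidate per tracklet: the locally best pair can force a poor match elsewhere.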

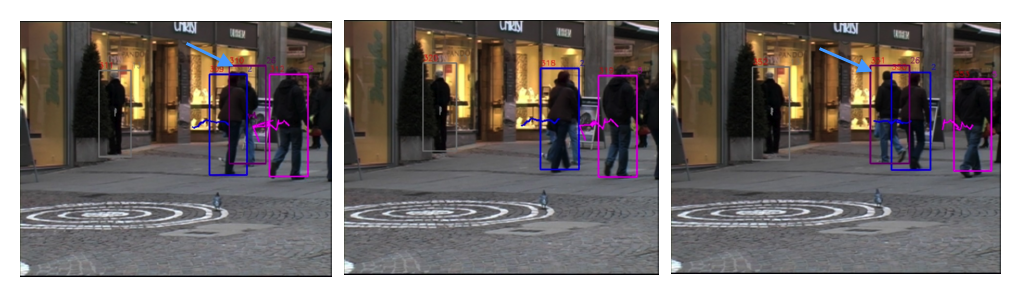

The proposed approach takes the output of the tracker in [63] as input and is tested on challenging datasets. Figure 1 shows that the tracklet keeps its ID even when occlusion occurs. Table 1 shows that this approach matches or outperforms the other state-of-the-art trackers on the listed metrics.

| Dataset | Method | MT (%) | PT (%) | ML (%) |
|---|---|---|---|---|
| TUD-Stadtmitte | [57] | 60.0 | 30.0 | 10.0 |
| TUD-Stadtmitte | [30] | 70.0 | 10.0 | 20.0 |
| TUD-Stadtmitte | [71] | 70.0 | 30.0 | 0.0 |
| TUD-Stadtmitte | [95] | 70.0 | 30.0 | 0.0 |
| TUD-Stadtmitte | Ours | 70.0 | 30.0 | 0.0 |
| TUD-Crossing | [89] | 53.8 | 38.4 | 7.8 |
| TUD-Crossing | Ours | 53.8 | 46.2 | 0.0 |
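
The report does not define the MT, PT and ML columns. The sketch below assumes the usual convention in the multi-object tracking literature: a ground-truth trajectory is mostly tracked (MT) when more than 80% of its length is covered by the tracker, mostly lost (ML) when less than 20% is covered, and partially tracked (PT) otherwise. The function name and the coverage values are hypothetical and are not taken from the datasets above.

```python
def tracking_summary(coverages):
    """coverages: one value in [0, 1] per ground-truth trajectory, giving the
    fraction of its frames in which the tracker follows it.
    Returns the percentages of MT, PT and ML trajectories (assumed 80%/20% cut-offs)."""
    counts = {"MT": 0, "PT": 0, "ML": 0}
    for ratio in coverages:
        if ratio > 0.8:
            counts["MT"] += 1
        elif ratio < 0.2:
            counts["ML"] += 1
        else:
            counts["PT"] += 1
    n = len(coverages)
    return {name: 100.0 * c / n for name, c in counts.items()}


# Ten hypothetical trajectories: seven well covered, three half covered.
summary = tracking_summary([0.9] * 7 + [0.5] * 3)
```

Under this convention the three percentages always sum to 100, so a lower ML at equal MT means that trajectories moved from the lost to the partially tracked category.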